Revolutionizing NOC Operations for the Telecom Industry

Introduction

“The Pace Of Evolution In IT, Their Experience Of Working In Environments With An Absence Of Fully Functioning Network Capabilities”

Problem Statement

Simplifying NOC operations and the monitoring of network state through automated, insight-driven AI-ML systems

Overview

Auto suggestions for ticket key metrics

For reduced human error and analysis of assigned values

Automated alarm correlation and RCA

For faster alarm detection

Digitally managed KPIs and inventory insights

For a holistic view of the network and its corresponding alarms

“Common Challenges And Network Problems From An NOC Operator’s Perspective, The Role Of AI And ML In Enabling Agile, Multitask-Able Environments”

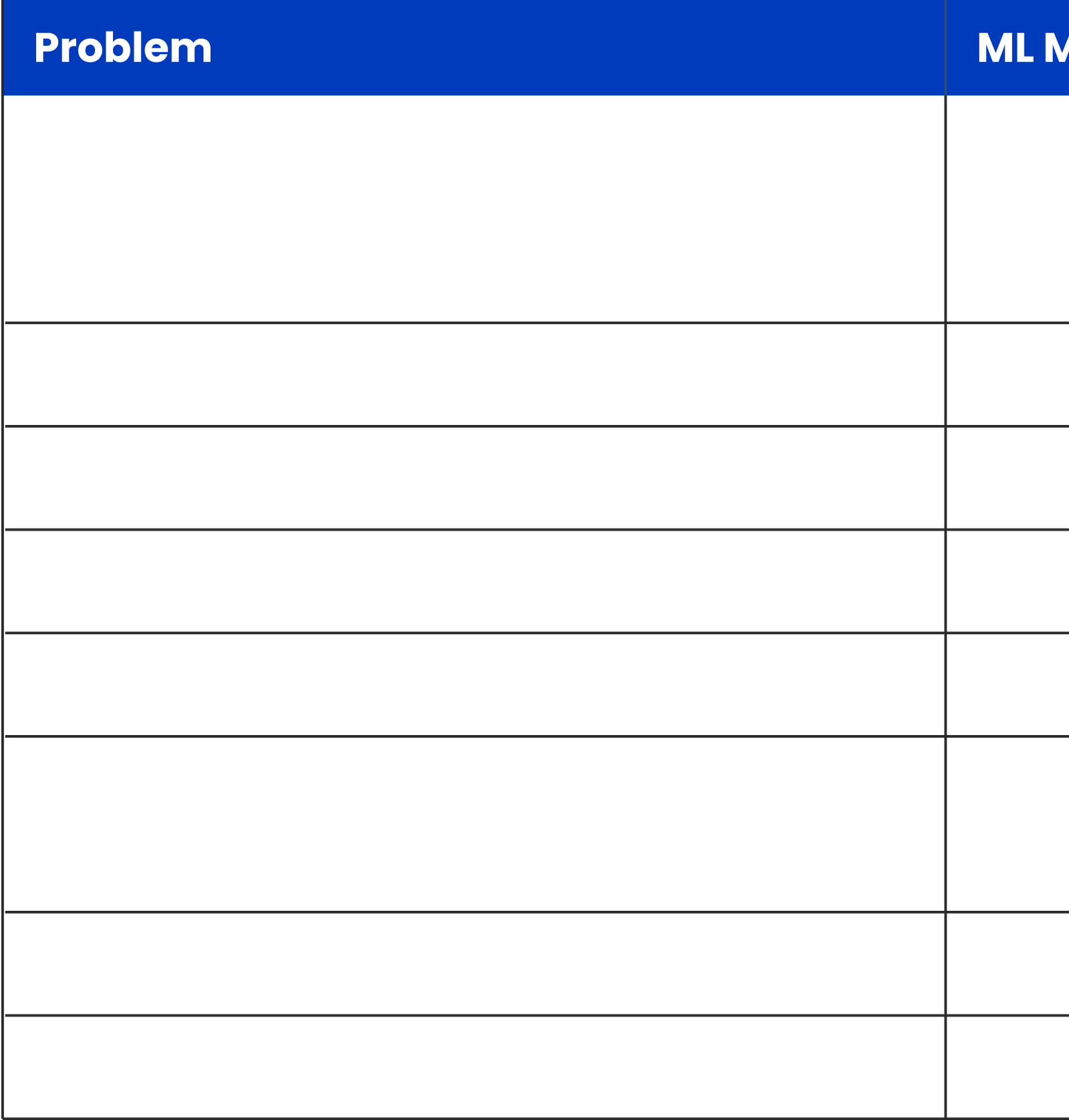

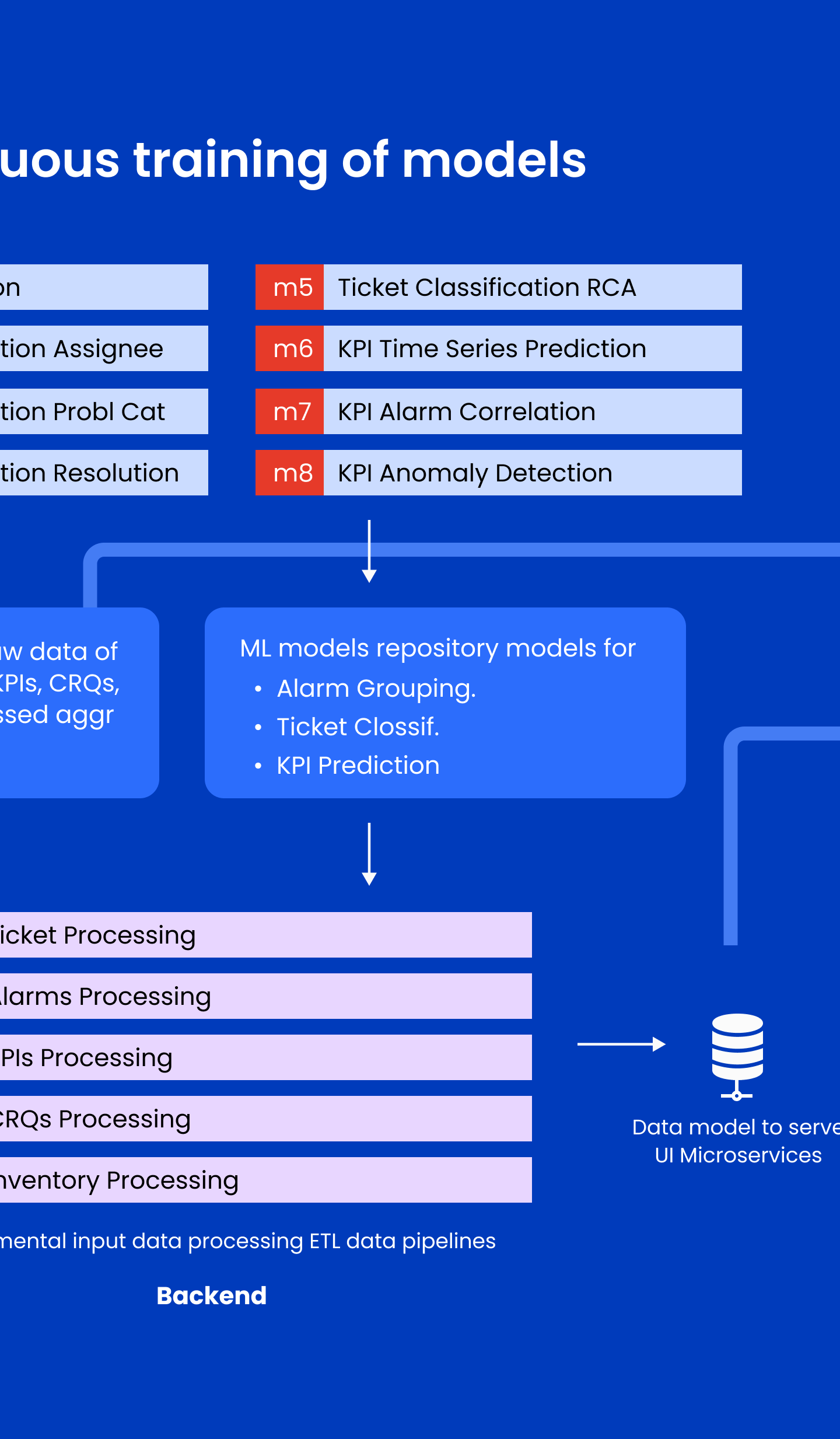

AI-ML Approaches: Top-Level View

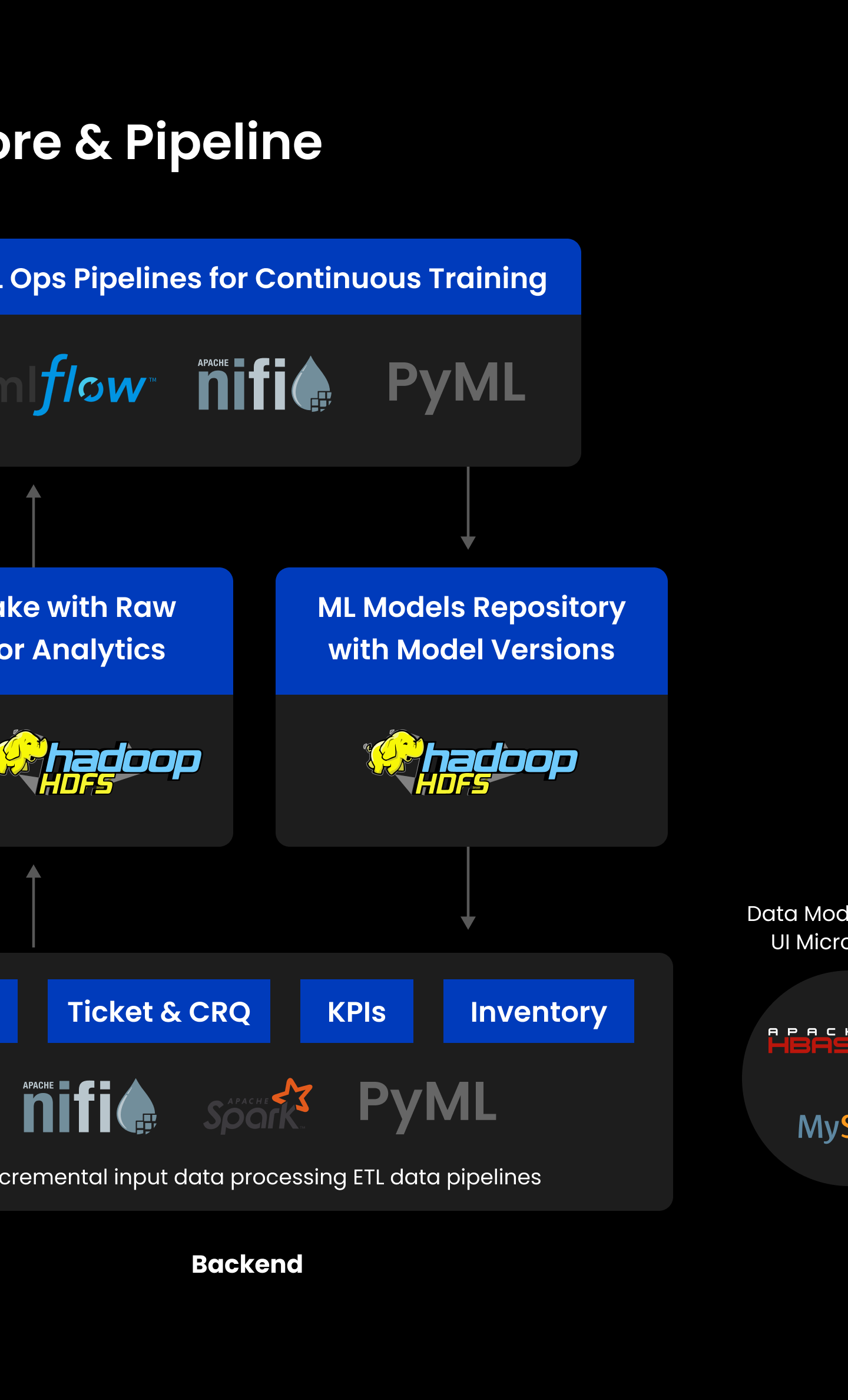

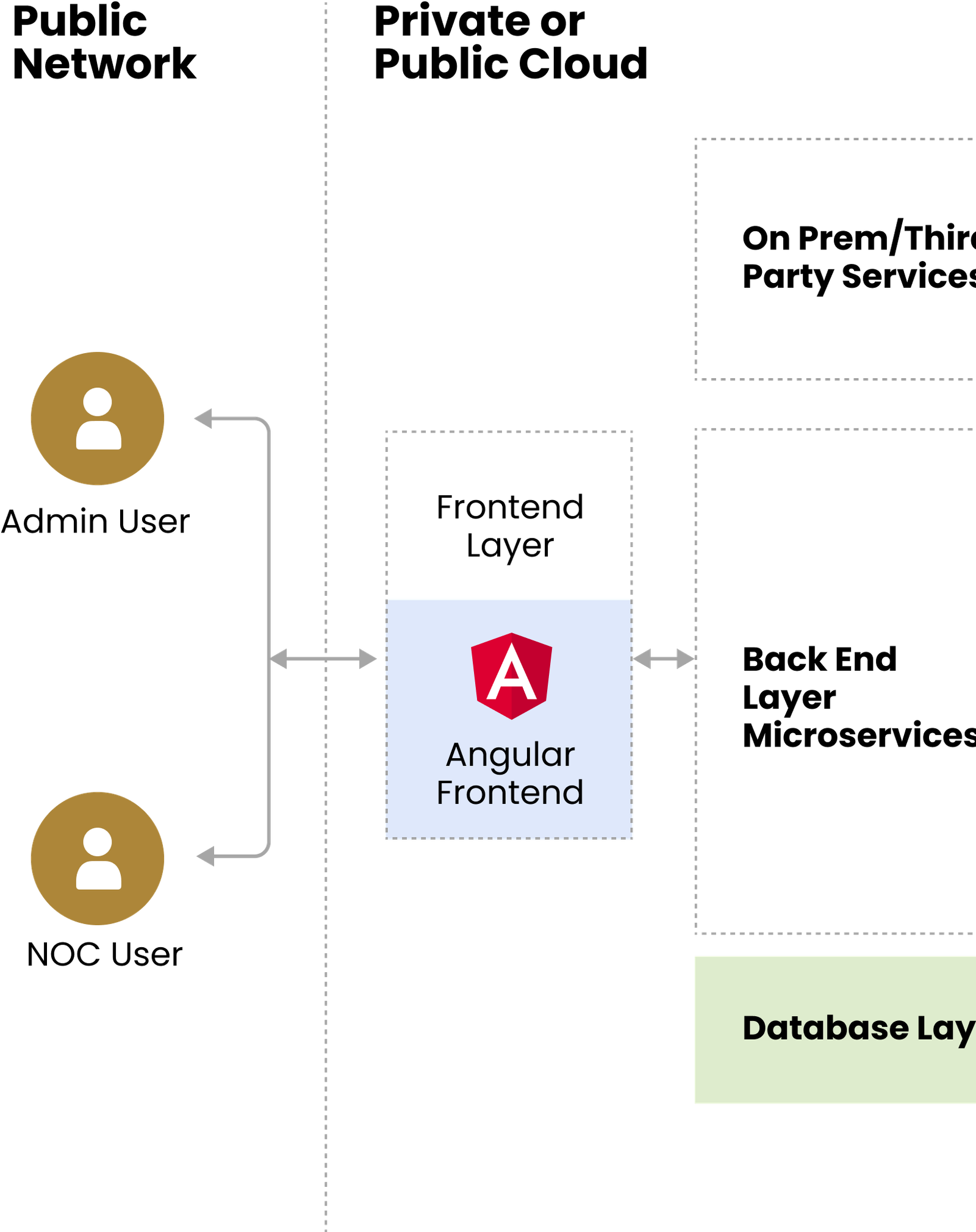

App and Data Architecture

Authentication Module

Responsible for user authentication and session management

Alarm Module

Responsible for showcasing alarm details, correlation and summaries

Ticket Module

Responsible for providing network ticket summary in a specific timespan

KPIs Module

Responsible for providing KPI by Vendor-Region-Site-Cells

AI Studio Module

Responsible for workspace management, data preprocessing and training configuration
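As an illustration of what "KPI by Vendor-Region-Site-Cells" could look like in practice, the following is a minimal sketch, not the application's actual code. It assumes KPI samples arrive as flat tuples and averages one KPI at a chosen level of the hierarchy. The sample values, the KPI name `call_drop_rate`, the vendor, region and site names, and the `rollup` helper are all hypothetical.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical KPI samples: (vendor, region, site, cell, kpi_name, value).
SAMPLES = [
    ("VendorA", "Kanto", "Site-01", "Cell-1", "call_drop_rate", 0.8),
    ("VendorA", "Kanto", "Site-01", "Cell-2", "call_drop_rate", 1.2),
    ("VendorA", "Kanto", "Site-02", "Cell-1", "call_drop_rate", 2.0),
    ("VendorB", "Kansai", "Site-07", "Cell-1", "call_drop_rate", 0.5),
]

# Order of the hierarchy, from coarsest to finest.
LEVELS = ("vendor", "region", "site", "cell")


def rollup(samples, kpi_name, level):
    """Average one KPI at the given level of the Vendor-Region-Site-Cell hierarchy."""
    depth = LEVELS.index(level) + 1  # number of hierarchy fields to group by
    groups = defaultdict(list)
    for *path, name, value in samples:
        if name == kpi_name:
            groups[tuple(path[:depth])].append(value)
    return {key: mean(values) for key, values in groups.items()}


by_site = rollup(SAMPLES, "call_drop_rate", "site")
by_vendor = rollup(SAMPLES, "call_drop_rate", "vendor")
```

Keying each group by its full path (vendor, region, site) rather than by the site name alone keeps identically named sites under different vendors or regions apart.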

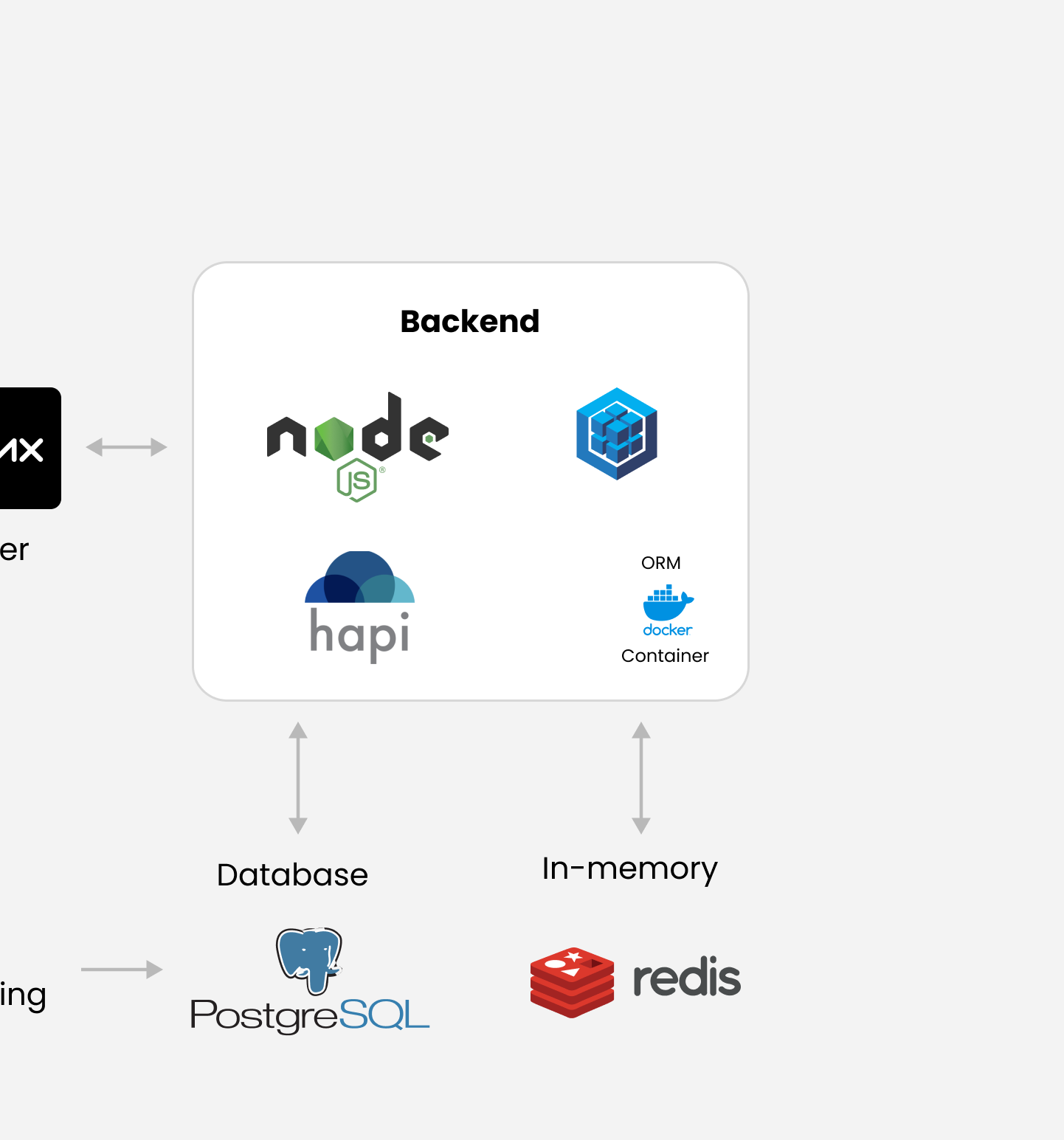

Databases

MongoDB, MySQL, Redis. The combination of these three ensures secure information storage, proper reporting of microservice metadata and seamless communication between the various tools and services

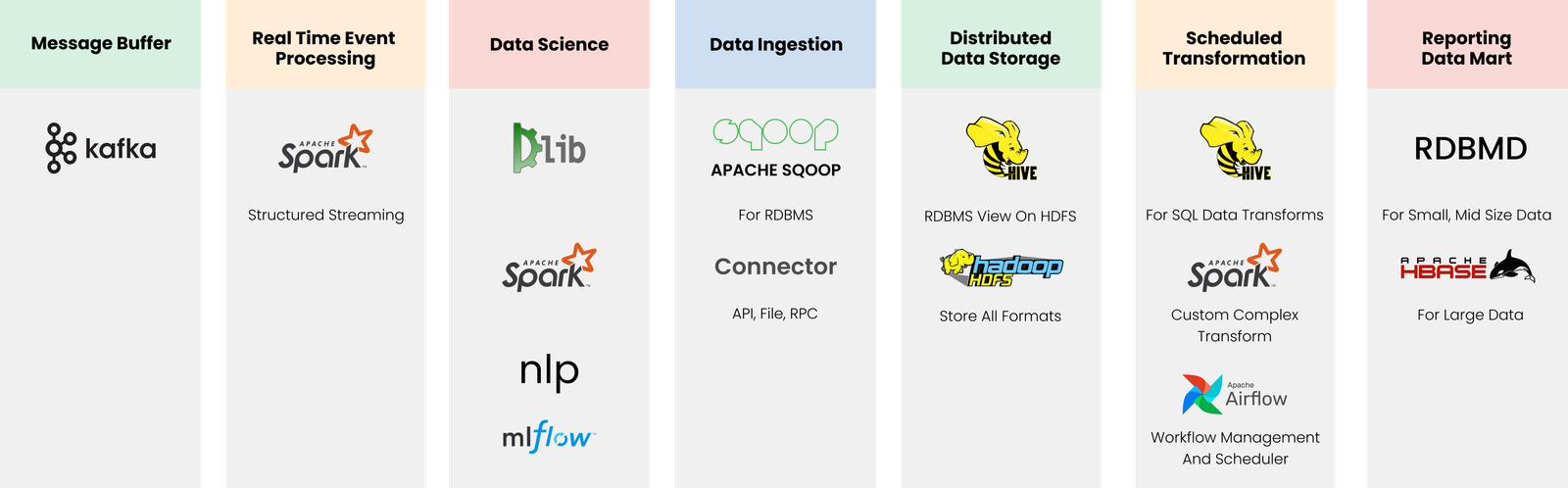

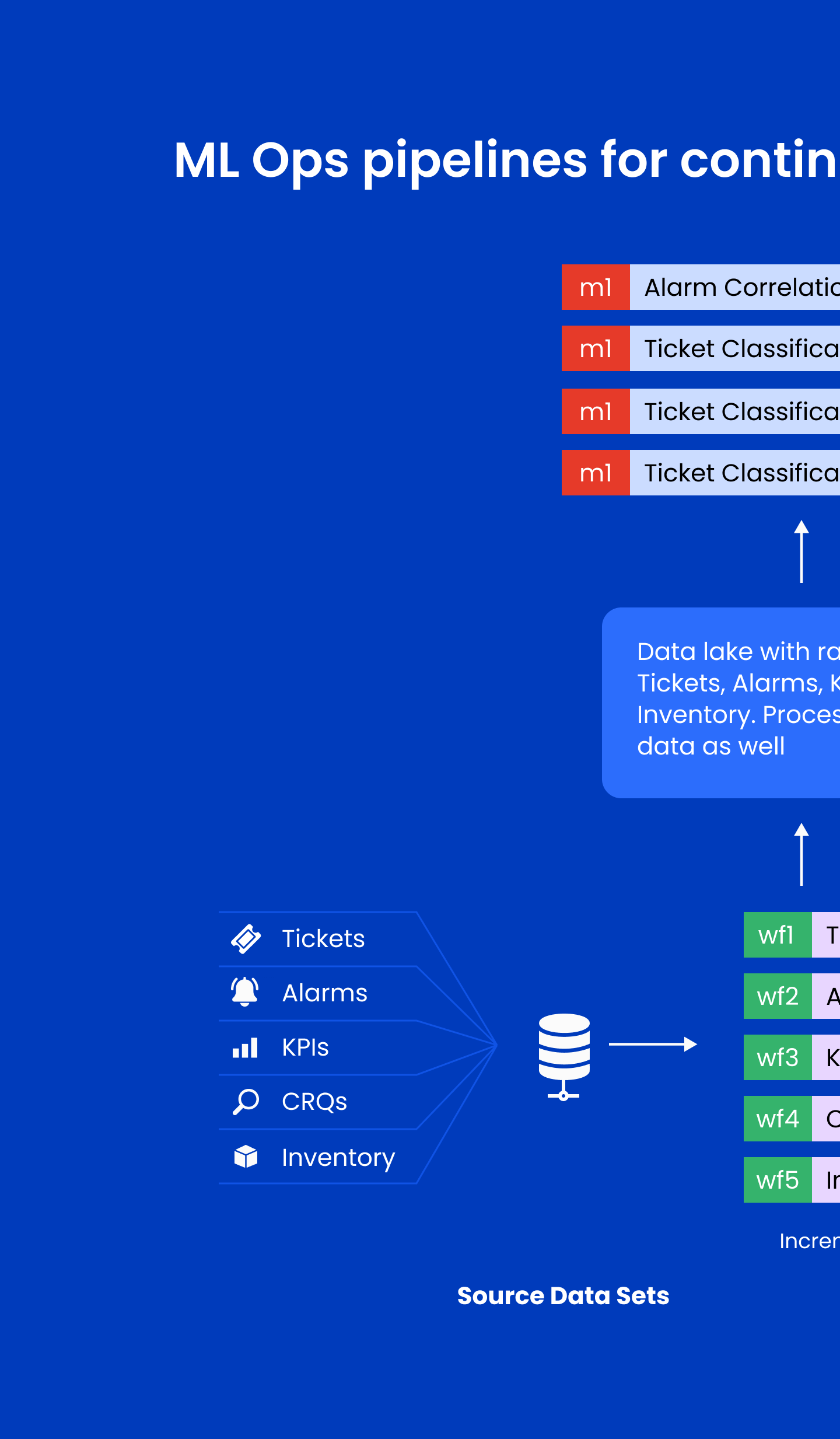

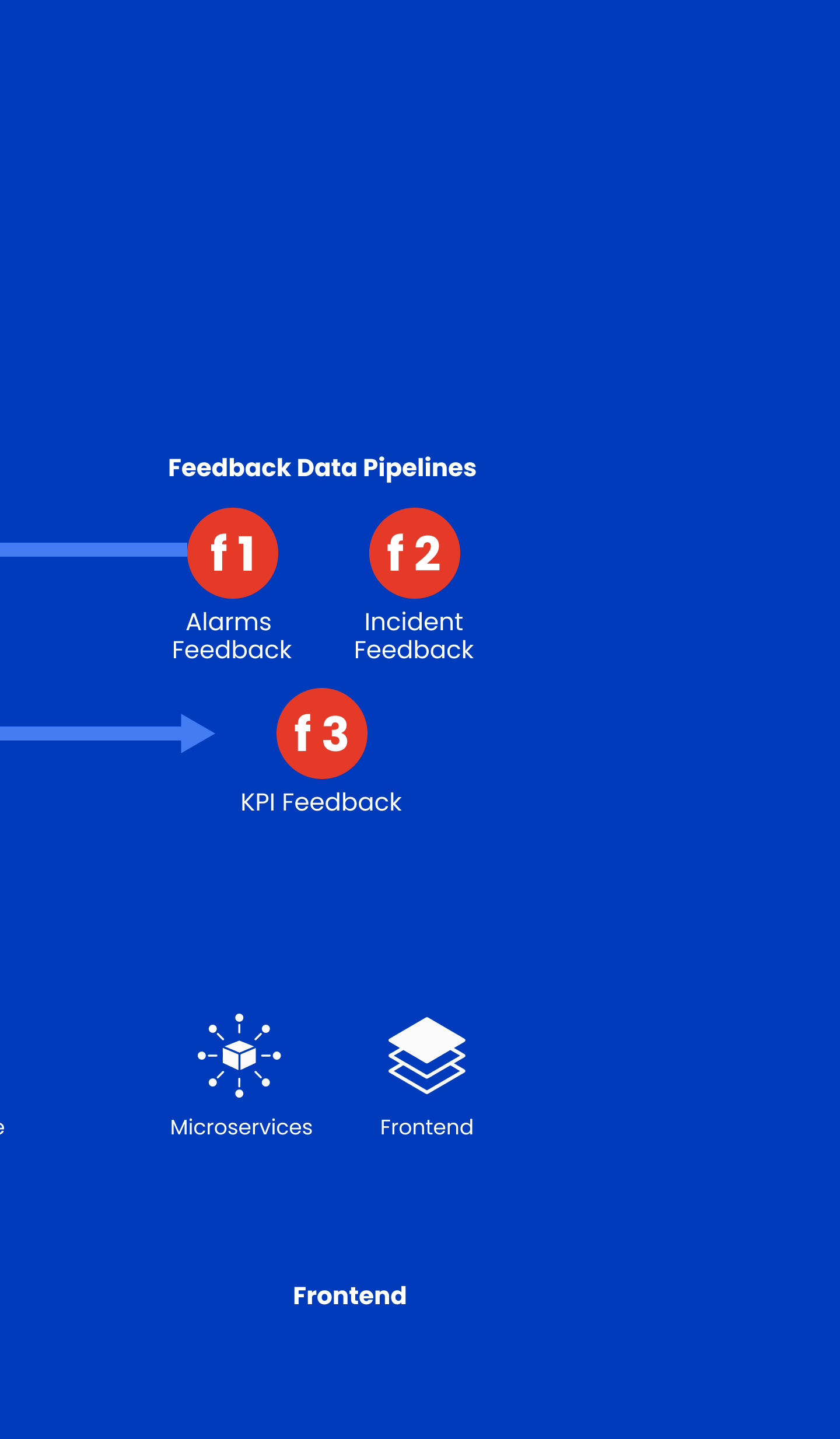

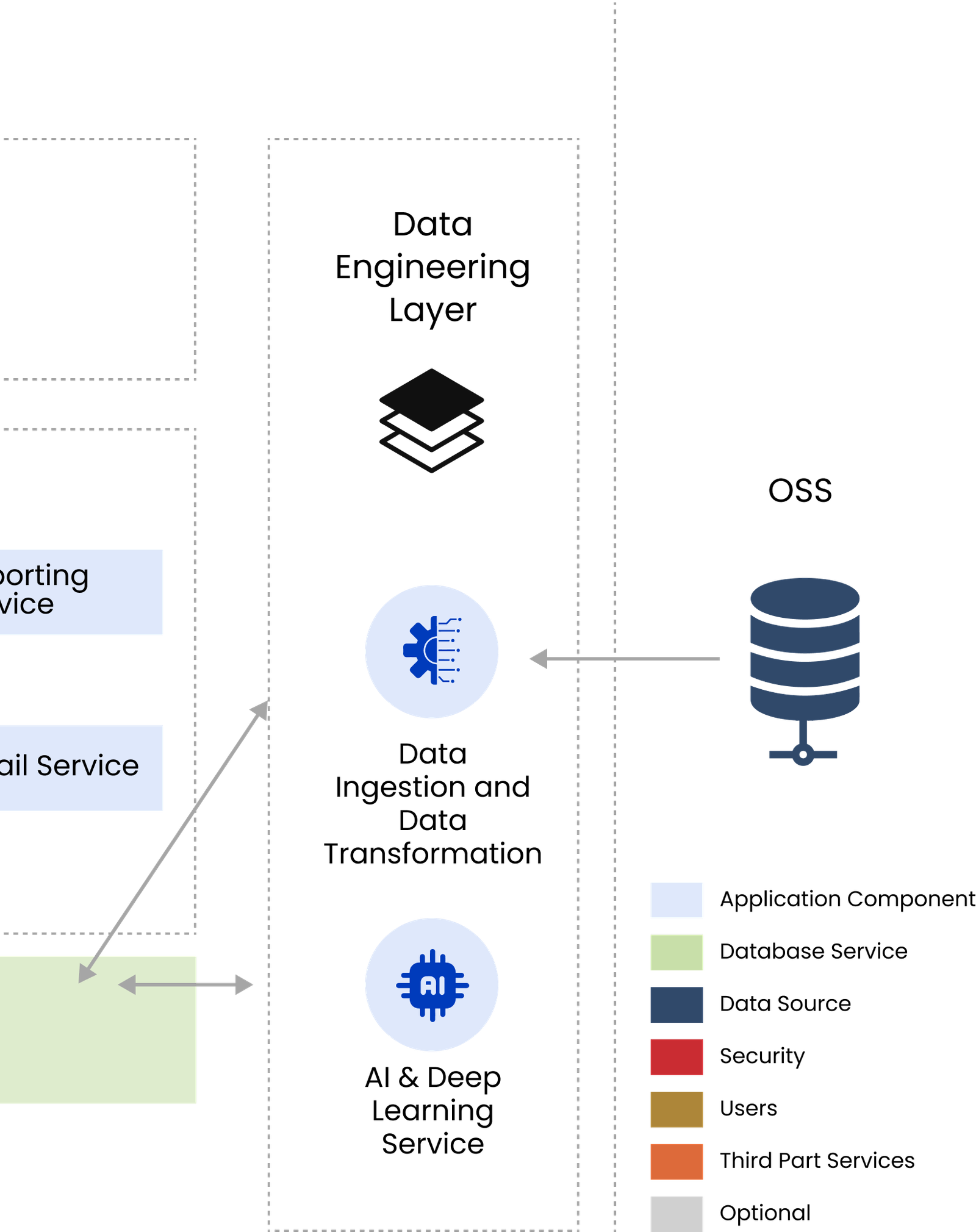

Data Engineering

This drives the correlation of alarms, resolutions, time series predictions and anomaly identification. Network outages can thus be predicted in advance from voice call drops, weather conditions and data speed
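The case study does not say which models are used for anomaly identification. As a rough sketch of the idea only, the example below flags points in a KPI series that deviate strongly from a trailing window, using a rolling z-score. The technique, the `rolling_anomalies` helper, its `window` and `threshold` defaults, and the sample data are assumptions made for illustration.

```python
from statistics import mean, stdev


def rolling_anomalies(values, window=5, threshold=3.0):
    """Return indices whose value deviates from the trailing-window mean
    by more than `threshold` sample standard deviations."""
    flagged = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma == 0:
            # Flat history: any change at all counts as a deviation.
            if values[i] != mu:
                flagged.append(i)
            continue
        if abs(values[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged


# Hypothetical hourly voice call drop rate (%), with a spike at index 7.
call_drop_rate = [1.0, 1.1, 0.9, 1.0, 1.2, 1.1, 1.0, 4.5, 1.1, 1.0]
anomalies = rolling_anomalies(call_drop_rate)
print(anomalies)  # [7]
```

A production system would more likely combine several signals (the text mentions call drops, weather and data speed) in a trained model; the z-score is only the simplest stand-in for "value far outside recent behaviour".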

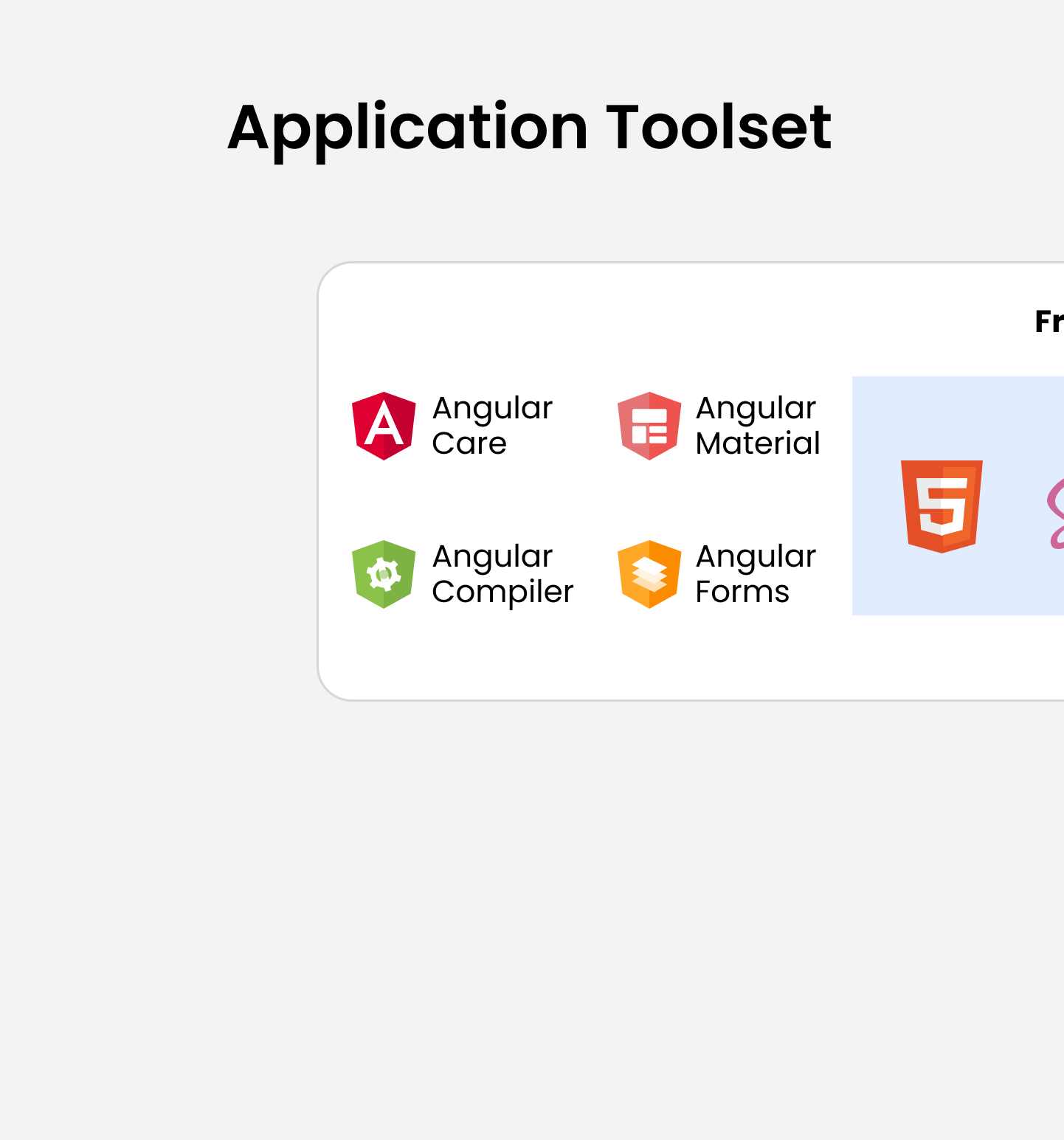

Frontend

Built on the Angular framework, using the Google Maps library.

“Experience Of How App Development Is Evolving Nowadays, And What Developers Can Do Better To Ensure A Seamless Frontend And Backend.”

with the correct definition and format of data, such that there is no lag between its inflow and outflow.

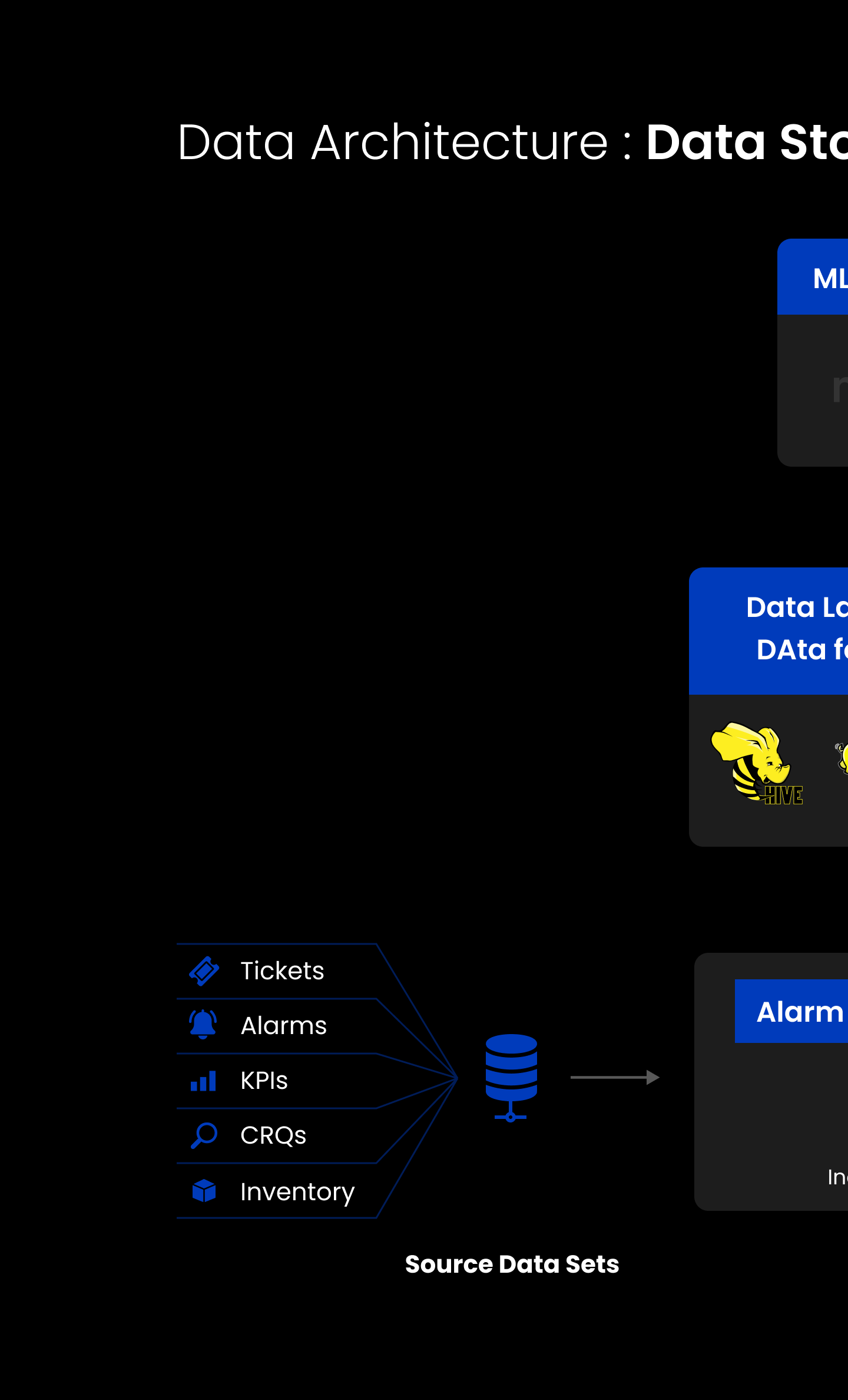

The app runs on the following five data processing pipelines:

KPI Data Processing Pipeline

Responsible for time series prediction of future KPI values and for anomaly prediction as per the ML model. It also determines the backend processing for these predictions

Ticket Data Processing Pipeline

Responsible for determining the RCA and for resolution classification, group classification and problem category classification

Alarms Data Processing Pipeline

Responsible for pushing alarm data to Kafka through the app, finalising the alarm schema, designing alarm dependencies and displaying the results in an alarm dashboard

CRQ Data Processing Pipeline

Responsible for reading CRQ data and matching it to the input CRQ records

Inventory Data Processing Pipeline

Responsible for aligning the input inventory data table with lookup tables in Redis, designing the data processing and implementing the data processing models
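To make the Alarms pipeline's "alarm schema" and "alarm dependencies" more concrete, here is a minimal sketch under stated assumptions; it is not the schema or correlation logic of the actual solution. It assumes each network element depends on at most one upstream element and that the dependency map has no cycles, then groups alarms under the most upstream element that is itself alarmed. The `Alarm` fields, the `DEPENDS_ON` map and all element names are hypothetical, and the Kafka transport is left out entirely.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Alarm:
    alarm_id: str
    element: str    # network element that raised the alarm
    severity: str
    raised_at: int  # epoch seconds


# Hypothetical dependency map: element -> upstream element it depends on.
# Assumed to be acyclic; a cycle would make the walk below loop forever.
DEPENDS_ON = {
    "cell-1": "site-01",
    "cell-2": "site-01",
    "site-01": "router-a",
}


def root_element(element, alarmed):
    """Walk upstream and return the highest alarmed element on the path."""
    root = element
    current = element
    while current in DEPENDS_ON:
        current = DEPENDS_ON[current]
        if current in alarmed:
            root = current
    return root


def correlate(alarms):
    """Group alarm ids under the most upstream alarmed element they depend on."""
    alarmed = {a.element for a in alarms}
    groups = {}
    for alarm in sorted(alarms, key=lambda a: a.raised_at):
        root = root_element(alarm.element, alarmed)
        groups.setdefault(root, []).append(alarm.alarm_id)
    return groups


alarms = [
    Alarm("A1", "site-01", "critical", 1000),
    Alarm("A2", "cell-1", "major", 1005),
    Alarm("A3", "cell-2", "major", 1006),
    Alarm("A4", "cell-9", "minor", 1010),
]
groups = correlate(alarms)
print(groups)  # {'site-01': ['A1', 'A2', 'A3'], 'cell-9': ['A4']}
```

Here the two cell alarms collapse under the site alarm, which is the kind of grouping a correlation dashboard could present as a single probable root cause, while the unrelated `cell-9` alarm stays on its own.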

“The Importance Of Having Different Data Libraries In Place, Their Experience In Making This Solution What It Is And Tips For Developers To Keep In Mind For Similar Future Solutions.”

When creating web applications, developers have a plethora of tech tools, solutioning mechanisms and libraries to choose from. The final decision needs to rest on a thorough understanding of each tool, the ways in which it interacts with the environment, its specific use cases and its direct impact areas.

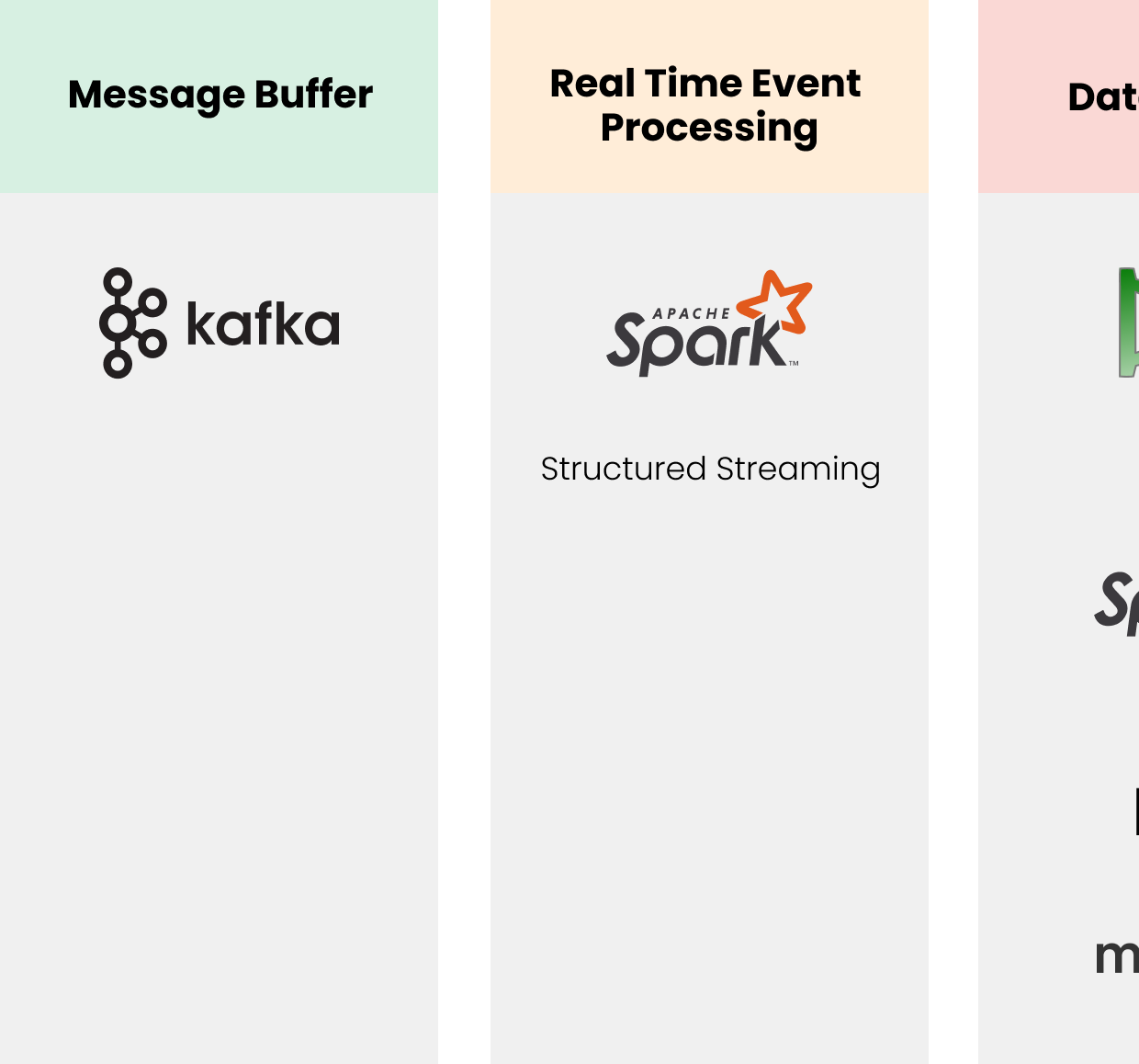

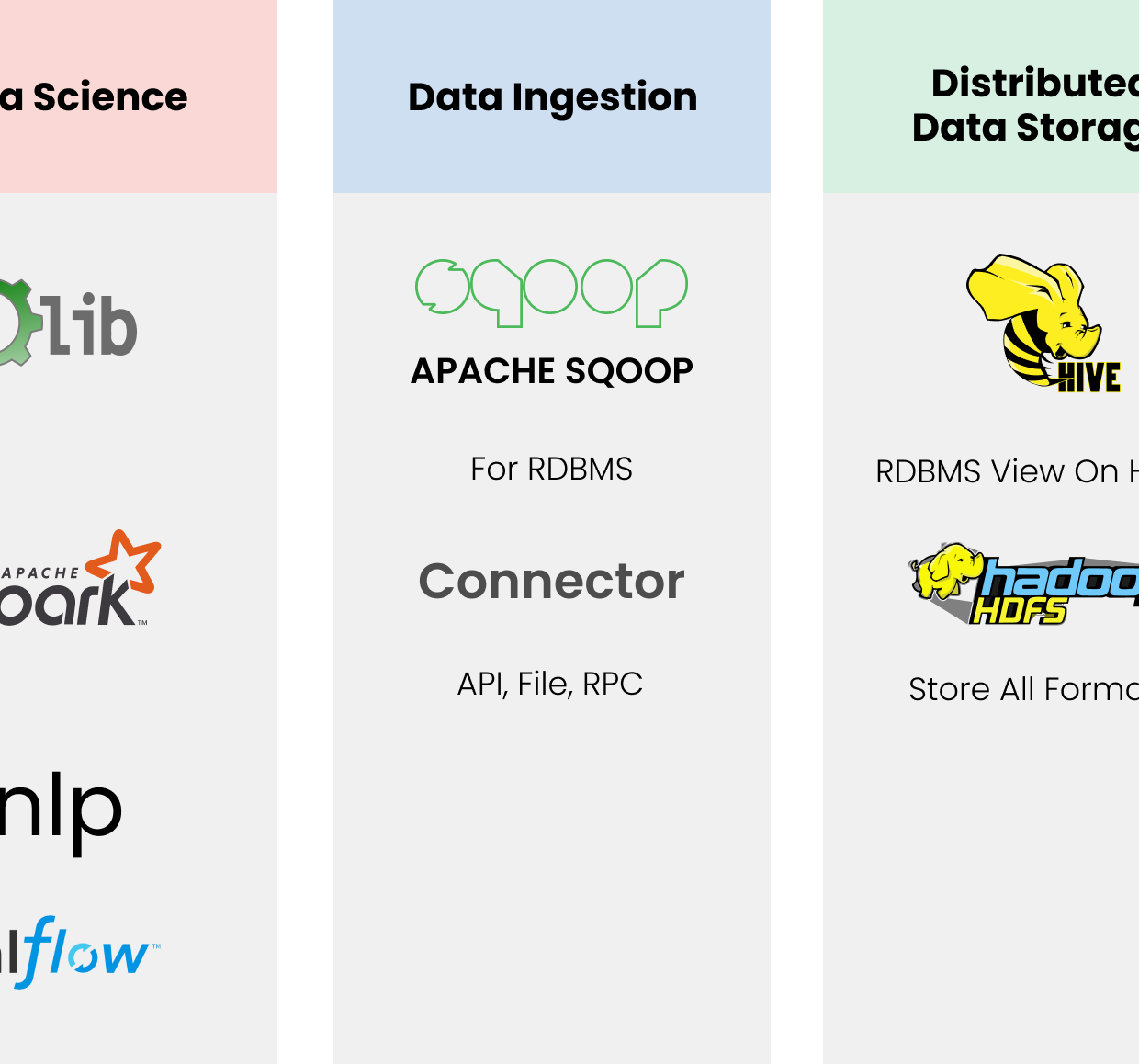

Open source Big Data Toolset

The frontend interacts with the backend through APIs over HTTP. The backend is built on Node.js, with code-level security and continuous code scanning to catch vulnerabilities at regular intervals.

The end result was a highly efficient single dashboard for NOC operators that lets them see requests, tickets and status updates in real time on one screen. This saves operators both effort and time. The application infrastructure, built on a microservices architecture, is easy to manage and supports a better customer experience. With end-to-end delivery, the solution takes a holistic approach to ensuring not just customer satisfaction but customer delight: it has the potential to bring in new business, attract more consumers and ensure seamless delivery throughout.

Company

Japan’s leading telecom service provider

Domain

Telecom & Networking

Service

Solution Engineering

Technology

Angular, MongoDB, NodeJS, Financial Tracker, Mobile, Android, Cloud

Platform

Mobile
